I was lucky to be one of the 150-200 people to attend the Google Webmaster Conference, hosted in one of Google’s Zurich offices on Wednesday, 11th of December, so I thought I’d write up a blog post with some of the notes and pictures I took at the event.

It was also a really good chance to speak to others who work in the industry. I have undoubtedly made a lot of new connections and hopefully gained some like-minded friends who do the sort of work I do.

The Googlers speaking at or attending the event were John Mueller, Daniel Waisberg, Martin Splitt, Gary Illyes and Lizzy Harvey.

The community speakers were Tobias Willman, Aleksej Dix and Izzi Smith.

Housekeeping

Google opened the presentation with some housekeeping, including a reminder that they can’t give out advice for specific websites. This is important to note, as any question you ask will be answered in a more general manner. This has been their policy for some time, and they typically will not give specific feedback unless they think it benefits more than a single website.

Google Search Console – Daniel Waisberg

Daniel is a Search Advocate who works on the Google Search Console product. The Google Search Console product team appears to be based in Tel Aviv. Daniel is well-known for the work he’s done at Google on the Google Analytics product and is the author of Google Analytics Integrations.

In his slides, he covered the mission of Google Search Console, updates and information on new features they have rolled out this year, and how they got there.

- Speed of Google Search Console data processing increased 10x due to engineering developments, hence they were able to give webmasters access to 16 months of data and more recent data

- They send millions of emails per month to help webmasters fix problems

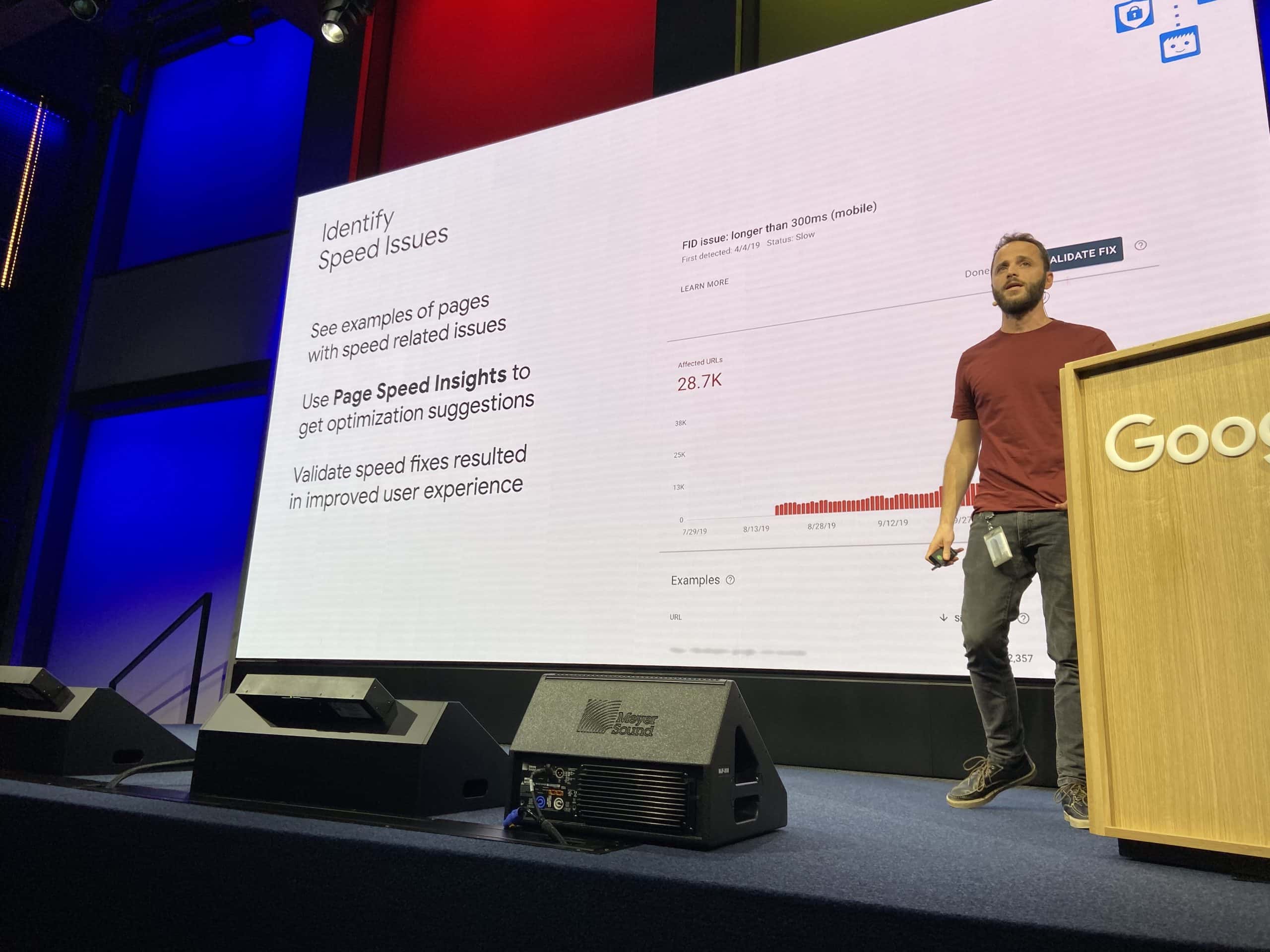

- It was highlighted that the newly created ‘Speed report’ in Google Search Console was extremely complex as they were taking data from the Chrome User Experience (CrUX) report, and worked “across departments” to get it working

- Purpose of the speed report is to give speed optimisation suggestions and to validate fixes

- Launched the ‘alerts’ and ‘messages’ last week (w/c 2nd of Dec) to help webmasters keep track of issues and alert webmasters to problems impacting their website on search

- Some of the old Google Search Console features will go away and some will be moved over, though not necessarily as 1:1 moves; the aim is for them to be improvements (follow up by John Mueller later)

- I managed to quickly catch up with him separately afterwards regarding BigQuery and Google Analytics, as an audience member had asked about natively integrating BigQuery into Google Search Console. I briefly mentioned Supermetrics to him as a connector that could be used to connect to BigQuery (but obviously a direct connector would be way more accessible).

The mission of Google Search Console:

Google Search News Update – John Mueller

- The Google Webmaster Trends team’s goal and mission is to ensure websites are successful on Google

- Anecdote: Cheese comes in all shapes and sizes (as with a lot of websites), but if you want the best cheese – you need great high-quality ingredients (or something to that extent) – either way, it’s pretty clear that John loves cheese; I mean, who doesn’t?

Other topics presented by John:

Mega Menus

- Create a clear structure (top, category – detail)

- Big menus are not necessarily bad

- No need to hide links, can use nofollow if needed

- Focus on usability

- Don’t copy other websites

Pagination without rel next/prev

- Link naturally between pages (in a way that the internal links are indexable)

- Use clean URLs (don’t use parameters)

- Use a local crawler (Screaming Frog, Sitebulb etc.)

Canonicalisation

- Canonicalisation uses more than rel="canonical"

- Redirects

- Internal and external links

- Sitemaps and hreflang

- Cleaner URLs, HTTPS, preferred domain setting
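Since Google weighs several of these signals together, it helps if your own site always links to one consistent form of each URL. Below is a minimal sketch of that idea in Python. It is my own illustration, not something shown at the event and not how Google picks a canonical; the host names and the list of tracking parameters are made up for the example.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical values, purely for illustration.
BARE_HOST = "example.com"
PREFERRED_HOST = "www.example.com"
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def canonical_url(url: str) -> str:
    """Return one consistent form of `url` to use in links, sitemaps and rel="canonical"."""
    parts = urlsplit(url)

    # `hostname` is lower-cased and has any port removed.
    host = parts.hostname or ""
    if host == BARE_HOST:
        host = PREFERRED_HOST

    # Keep meaningful parameters, drop the tracking ones.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    ]

    # Always HTTPS, never an empty path, never a fragment.
    path = parts.path or "/"
    return urlunsplit(("https", host, path, urlencode(query), ""))


print(canonical_url("http://example.com/cheese?utm_source=news"))
```

The same function can feed internal links, the sitemap and the rel="canonical" tag, so that all of the signals in the list point at the same URL.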

What did Google remove/add in 2019?

Removed:

- Support for Flash

- rel=next, rel=prev (Google sees websites already doing this correctly, so no need to do anything special for Google)

- DMOZ/ODP went away

- Noindex in robots.txt

- Google link: and info: operators

Added:

- Introduced favicons

- Better preview/snippet controls (schema)

- rel="nofollow" as a hint

- Added rel="sponsored" and rel="ugc"

- Discover / Google Feed

- Introduced a robots.txt standard

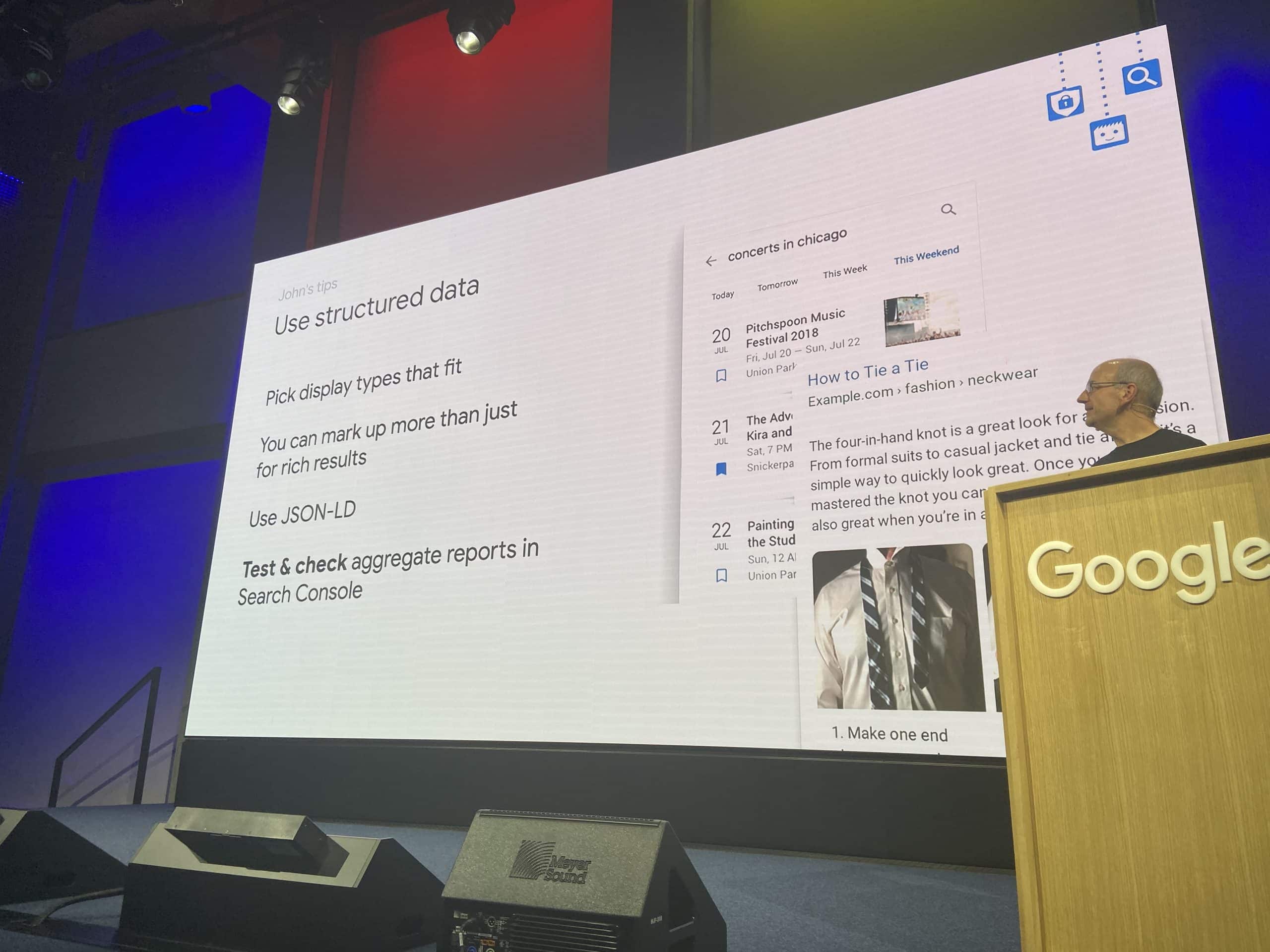

Use structured data

- Lizzy Harvey wrote up all of the structured data documentation

- Google looking to do more in this space and will be making further structured data enhancements for 2020

- Structured data is good for giving even more context to a page (outside of pure direct impact to search snippets)

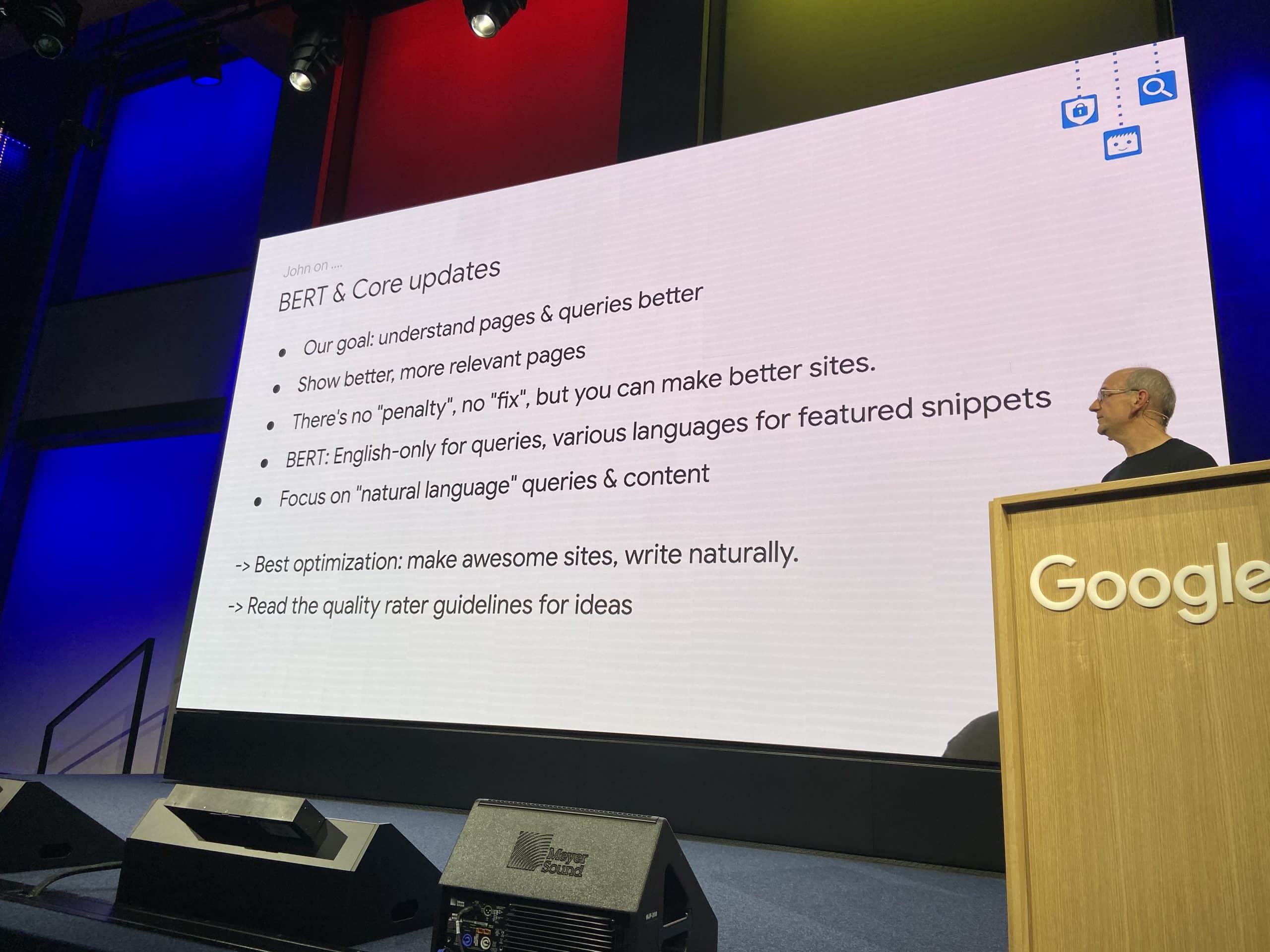

Core Updates & BERT

- Google’s focus is to better understand pages and queries, and show better, more relevant results

- Core updates – always happen, but they will be bigger in future

- No specific fix for websites impacted by core updates – other than trying to make your website better (as a whole)

- BERT – tries to understand web search queries better and tries to identify which pages are relevant for search queries (more on BERT query understanding)

- Key focus on natural language for content – content should be written naturally

- H1 – H3 headings help Google understand even images (semantics of the sections of a page – associated with specific images)

- Read the Quality Rater Guidelines

Crystal ball and looking into the future

- More “core” algorithm updates

- Better understanding of queries and pages

- “data-nosnippet” attribute

Javascript

A combination of Martin Splitt, John Mueller and community speaker Aleksej Dix talked about dynamic rendering and server-side rendering throughout their presentations. Google went into more detail on how rendering works, and Aleksej provided details on practical examples of what you can do using NUXT.js (more on that below).

What is tree shaking? Inevitably, JavaScript bundles grow over time. Tree shaking removes code that is no longer in use, so that it is left out of the bundle during the build process. Martin went on to talk through some examples.

Martin then talked about how Google tries to read JavaScript; they try to allow enough time to read the whole file (around 40 seconds) for rendering purposes:

- Google tries to cache javascript aggressively for purposes of indexation

- If there are excessive amounts of time/CPU taken, then Google “will cut […] off” and potentially stop working hard at reading a file

Javascript Takeaways:

- All of the general guidelines in SEO apply to javascript (links, URLs, images, structured data, crawling etc)

- Use real URLs (no “#” fragments in URLs) – 12% of single-page applications use fragment URLs

- Minimise embedded resources (speed up server for crawling, and rendering speed/CPU)

- Use testing tools & a local rendering crawler

- Link through to the Google Search JavaScript documentation

- Budget for rendering, use capable servers & robust JS

- “SEO is not dead” – off the back of saying that as JS becomes more popular, there will need to be SEO support to ensure that Google is able to efficiently crawl the javascript for indexation and ranking purposes
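To illustrate the “real URLs” point, here is a small Python sketch for flagging fragment-based routes in a list of crawled URLs. The rule it uses (a fragment starting with “/” or “!” is treated as a client-side route, anything else as an ordinary in-page anchor) is my own assumption for the example, not a definition given by Google.

```python
from urllib.parse import urlsplit


def uses_fragment_routing(url: str) -> bool:
    """Guess whether a URL relies on a '#' fragment as a client-side route."""
    fragment = urlsplit(url).fragment
    # "#/products/42" and "#!/about" look like routes;
    # "#section-2" is just an anchor within a real page.
    return fragment.startswith("/") or fragment.startswith("!")


crawled = [
    "https://example.com/#/products/42",
    "https://example.com/products/42",
    "https://example.com/guide#section-2",
]
for url in crawled:
    print(url, uses_fragment_routing(url))
```

Running something like this over a crawl export is a quick way to spot pages that only exist behind a fragment and would benefit from a real URL.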

NUXT.JS // Server-Side Rendering

I won’t pretend to understand everything that was covered in the JavaScript slides, but the example output from Aleksej Dix, who presented NUXT.JS (“a progressive framework based on Vue.js to create modern web applications”), was very good at explaining this to non-devs such as myself.

It impacts SEO because it renders content on both the client and the server side. I was very impressed with the live example Alek gave, in which he disabled JavaScript in the browser and the functionality and content remained. Usually with other libraries, if you disabled JavaScript in your browser, your screen would go white/blank, but in this instance the content persisted – read more on NUXT.js and server-side rendering.

- NOTE: I have also found out through a small personal project of mine that in order to get NUXT.js running, you need Node.js installed on your server (though this is also in Alek’s slides, so I just wasn’t paying enough attention). Not all shared web hosts have Node.js installed or enabled, but some, like A2 Hosting, do.

A view into how it works:

- Server-Side Rendering (SSR) means that some parts of your application code can run on both the server and the client. This means that you can render your components directly to HTML on the server-side (e.g. via a node.js server), which allows for better performance and gives a faster initial response, especially on mobile devices. Source.

The key takeaway here is to use NUXT.js on a Node.js-enabled server if you’re using Vue.js. However, if NUXT.JS isn’t an option, there is an alternative from Google called “dynamic rendering”:

Dynamic rendering is good for indexable, public JavaScript-generated content that changes rapidly, or content that uses JavaScript features that aren’t supported by the crawlers you care about. Not all sites need to use dynamic rendering, and it’s worth noting that dynamic rendering is a workaround for crawlers.
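As I understand it, the core of dynamic rendering is a switch on the requesting user agent: crawlers get pre-rendered static HTML, everyone else gets the normal client-side app. The Python sketch below shows only that decision. The function names and the list of crawler tokens are hypothetical and incomplete, and a real setup would sit in front of an actual pre-renderer.

```python
# Hypothetical, incomplete list of crawler user-agent substrings.
CRAWLER_TOKENS = ("googlebot", "bingbot")


def is_crawler(user_agent: str) -> bool:
    """Return True when the user agent contains a known crawler token."""
    lowered = user_agent.lower()
    return any(token in lowered for token in CRAWLER_TOKENS)


def choose_response(user_agent: str, prerendered_html: str, app_shell_html: str) -> str:
    """Serve pre-rendered HTML to crawlers and the JavaScript app shell to users."""
    return prerendered_html if is_crawler(user_agent) else app_shell_html


googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
print(choose_response(googlebot, "<h1>Cheese</h1>", "<div id='app'></div>"))
```

Both versions should carry the same content; the only difference is whether the browser or the server does the rendering.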

Useful documentation:

- https://nuxtjs.org/guide/#server-rendered-universal-ssr-

- https://developers.google.com/web/updates/2019/02/rendering-on-the-web

- https://developers.google.com/search/docs/guides/dynamic-rendering

I will stop writing at this point, as this has already taken me quite some time to write up and to remember all of the key takeaways, but there were obviously other presentations from community speakers such as Tobias Willman and Izzi Smith.

News SEO & Featured Snippets

Tobias kindly shared his slides on Google Slides, which covered ‘News SEO’ – more for those in the publishing space.

Izzi talked about featured snippets and content – with the key advice being:

- “become a well-structured, engaging, and satisfying resource with relevant authority and high accessibility”

All in all, it was a really good event and those in the Google Webmaster Trends team planned it excellently, so credit where credit is due. Everyone at the event was super friendly and chatty, so it was easy to chat to others as I went alone (as did many others), so it was a good way to get out of my comfort zone and speak to other likeminded people. 🙂

Questions & Answers:

Q (disclosure, this was my question): John, one of your slides covered the change in policy at Google around rel="nofollow", which will now be used as a hint. How does this impact the web from a Google perspective? What will change as a result?

A: Gary responded to this, as he co-authored the Google blog post that announced in September that this would roll out on March 1st, 2020. The general theme of his response was that there will still be no PageRank flowing through nofollow links, and that there are ‘other signals’ which could make nofollow links useful for Search purposes outside of PageRank.

–

Q: Will there be a separation of ‘voice-activated searches’ in Google Search Console?

A: No. Google explained (as they have before) that there is not much in terms of this insight being actionable, and that whilst you can’t separate this in Google Search Console, the voice data is part of Google Search Console reporting.

–

Q: Meta description and title tag length?

A: There is no single recommended length for title tags or meta descriptions, because what is displayed varies with screen size – hence Google will not be giving out any guidelines on this.

–

Q: Will there be any updates to the APIs for Google Search Console?

A: Nothing planned. The response was that they weigh up the engineering resources they have against how heavily the API is used compared with everything else, and right now API usage isn’t high enough, while improving it would require a good amount of resources.

–

Q: Is there a plan to increase (already increased to 16 months) the length of data in Google Search Console to 18 or 24 months?

A: No. A lot of hard work has already gone into increasing the maximum date range to 16 months from 3 months previously, and with that, increasing the speed at which Google is able to fetch this type of data. See Daniel Waisberg’s earlier point about engineers in the Google Search Console team increasing the data processing speed by 10x.

–

Q: Why doesn’t Google use image recognition to help provide context for images so websites don’t need to provide alt text?

A: Google explained that webmasters still need to provide context for images within articles, and that alt text is still required for accessibility purposes, for those with visual impairments who use screen readers.

–

Q: A page has content that updates dynamically based upon IP address; what is Google seeing and indexing?

A: Googlebot will see the US content version of that page as the canonical (through a US IP address); the discussion around creating country-specific sections/pages is where this conversation/answer ended – and potentially going down the hreflang route.
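For anyone considering the hreflang route mentioned in that answer, here is a rough Python sketch of generating the link tags for a set of country-specific pages. The URLs and language codes are made up, and this is only my illustration of the markup, not something shown in the session.

```python
from html import escape
from typing import Dict, List


def hreflang_links(alternates: Dict[str, str], default_url: str) -> List[str]:
    """Build <link rel="alternate"> tags for each language/region version of a page."""
    links = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in alternates.items()
    ]
    # x-default marks the fallback page for users who match no listed version.
    links.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />')
    return links


# Hypothetical country-specific versions of the same page.
pages = {
    "en-us": "https://example.com/us/",
    "de-ch": "https://example.com/ch/",
}
for tag in hreflang_links(pages, "https://example.com/"):
    print(tag)
```

Each country-specific page would carry the full set of tags, including one pointing at itself, so that every version has its own crawlable URL rather than relying on the visitor’s IP address.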

–

Q: What JavaScript library is the gold standard?

A: Martin responded by saying that this is not a good question. The output can be different regardless of the framework and is more centered around how it is deployed.